| Human-Robot Collaboration | Exploration and Development in

Autonomous Systems |

Intelligent Tutoring Systems and

Education Software |

Perception and Control |

Robot

Design |

Human-Robot Collaboration

| Active Inverse Reinforcement

Learning Inverse reinforcement learning addresses the general problem of recovering a reward function from samples of a policy provided by an expert/demonstrator. In this paper, we introduce active learning for inverse reinforcement learning. We propose an algorithm that allows the agent to query the demonstrator for samples at specific states, instead of relying only on samples provided at arbitrary states. The purpose of our algorithm is to estimate the reward function with similar accuracy as other methods from the literature while reducing the amount of policy samples required from the expert. We also discuss the use of our algorithm in higher dimensional problems, using both Monte Carlo and gradient methods. We present illustrative results of our algorithm in several simulated examples of different complexities. Active Learning for Reward Estimation in Inverse Reinforcement Learning,. European Conference on Machine Learning (ECML/PKDD), Bled, Slovenia, 2009. (pdf) |

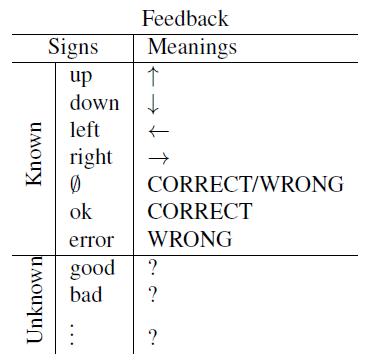

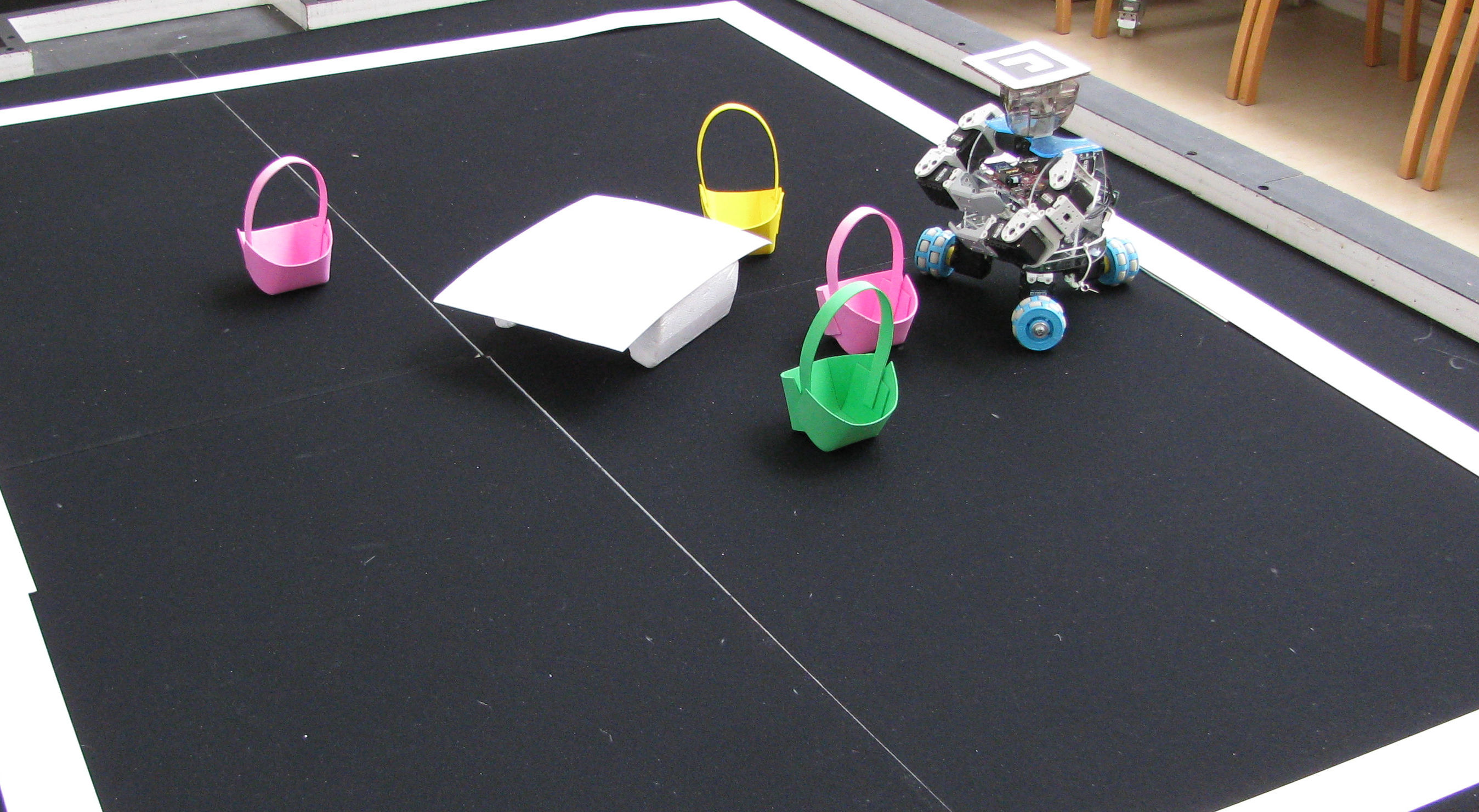

Simultaneous Acquisition of Task and Feedback Models

We present a system to learn task representations from ambiguous feedback. We consider an inverse reinforcement learner that receives feedback from a teacher with an unknown and noisy protocol. The system needs to estimate simultaneously what the task is (i.e. how to find a compact representation to the task goal), and how the teacher is providing the feedback. We further explore the problem of ambiguous protocols by considering that the words used by the teacher have an unknown relation with the action and meaning expected by the robot. This allows the system to start with a set of known signs and learn the meaning of new ones. We present computational results that show that it is possible to learn the task under a noisy and ambiguous feedback. Using an active learning approach, the system is able to reduce the length of the training period. Robot Learning Simultaneously a Task and How to Interpret Human Instructions, . ICDL-Epirob, Osaka, Japan, 2013. (pdf) Simultaneous Acquisition of Task and Feedback Models, . IEEE - International Conference on Development and Learning (ICDL), Germany, 2011. (pdf) |

Robot Self-Initiative and Personalization by Learning through Repeated Interactions

Robot Self-Initiative and Personalization by Learning through Repeated Interactions, . 6th ACM/IEEE International Conference on Human-Robot (HRI’11), Lausanne, Switzerland, 2011. (pdf) |

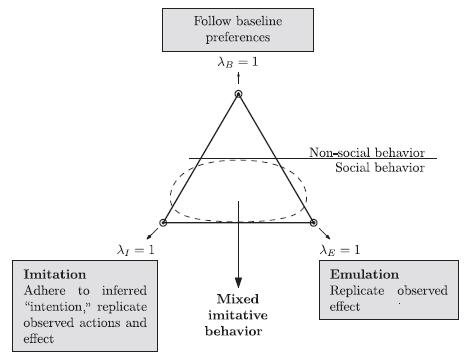

Task Inference and

Imitation Relevant publications: Lopes et al AdBeh09 (pdf) Lopes et al IROS07(pdf) |

Affordance based

imitation Affordance-based imitation learning in robots, . IEEE – Intelligent

Robotic Systems (IROS), San Diego, USA, 2007. (pdf) |

Behavioral Switching in Animals and Children

|

Reflexive imitation based

on perceptual-motor maps |

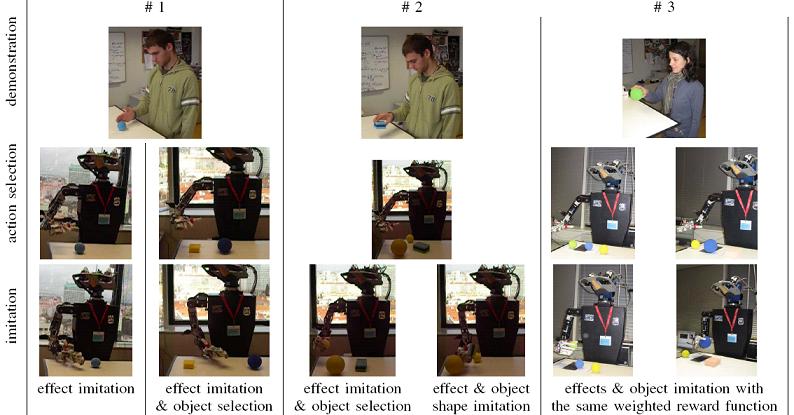

In this demo the robot is

shown a demonstration of a task. It knows how to grasp, how to

tap, and by knowing the object affordances it is able to

recognize such actions from the effects on objects. After

observing the demonstration and segment it in its components it

needs to infer the goal/intention of it. Inverse reinforcement

learning algorithms can be used as a way to infer the reward

function that better explains the observed behavior. It is

interesting that different tasks (e.g. considering or not

subgoals) are infered depending on different knowledges about

the world or on contextual restrictions (see ).

In this demo the robot is

shown a demonstration of a task. It knows how to grasp, how to

tap, and by knowing the object affordances it is able to

recognize such actions from the effects on objects. After

observing the demonstration and segment it in its components it

needs to infer the goal/intention of it. Inverse reinforcement

learning algorithms can be used as a way to infer the reward

function that better explains the observed behavior. It is

interesting that different tasks (e.g. considering or not

subgoals) are infered depending on different knowledges about

the world or on contextual restrictions (see ).

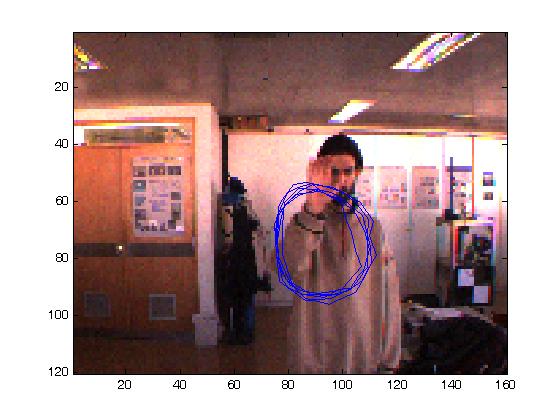

This experiment

shows Baltazar mimicking human gestures(movements). While

seeing a gesture performed by and human (

This experiment

shows Baltazar mimicking human gestures(movements). While

seeing a gesture performed by and human (