Flowers Laboratory

FLOWing Epigenetic Robots and Systems

language acquisition

ICDL-EpiRob2013: Can robots discover spoken words and their connection to human gestures?

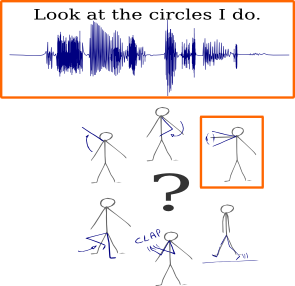

Next week, we will present our paper Learning Multimodal Semantic Components from Subsymbolic Perception Using NMF at the third joint ICDL-EpiRob conference in Osaka, Japan. In that article we demonstrate that it is possible to learn semantic concepts, each of which is both associated to a word in spoken utterances and a gesture, by only looking at correlations between subsymbolic representations of two modalities.

Next week, we will present our paper Learning Multimodal Semantic Components from Subsymbolic Perception Using NMF at the third joint ICDL-EpiRob conference in Osaka, Japan. In that article we demonstrate that it is possible to learn semantic concepts, each of which is both associated to a word in spoken utterances and a gesture, by only looking at correlations between subsymbolic representations of two modalities.

More details about the work can be found on that page.

Mangin O., Oudeyer P.Y., Learning semantic components from sub-symbolic multi-modal perception. to appear in the third Joint IEEE International Conference on Development and Learning an on Epigenetic Robotics (ICDL-EpiRob 2013), Osaka (Japan). [bibtex] [poster][code] [details]