Flowers Laboratory

FLOWing Epigenetic Robots and Systems

The emergence of multimodal concepts – defense video

The video of Olivier Mangin's PhD defense is finally out!

The full dissertation can be found here (olivier.mangin.com/publi).

Olivier’s work focused on learning recurring patterns in multimodal perception. For that purpose he developed cognitive systems that model the mechanisms providing such capabilities to infants, a methodology that fits into the team’s field of developmental robotics.

More precisely, his thesis revolves around two main topics: on the one hand, the ability of infants or robots to imitate and understand human behaviors, and on the other, the acquisition of language. At the crossing of these topics, it studies the question of how a developmental cognitive agent can discover a dictionary of primitive patterns from its multimodal perceptual flow. It specifies this problem and formulates its links with Quine’s indeterminacy of translation and with blind source separation, as studied in acoustics.

The thesis sequentially studies four sub-problems and provides an experimental formulation of each of them. It then describes and tests computational models of agents solving these problems. These models are mainly based on bag-of-words techniques, matrix factorization algorithms, and inverse reinforcement learning approaches.

It first goes in depth into three separate problems: learning primitive sounds, such as phonemes or words; learning primitive dance motions; and learning the primitive objectives that compose complex tasks. Finally, it studies the problem of learning multimodal primitive patterns, which corresponds to solving several of the aforementioned problems simultaneously. It also details how this last problem models the grounding of acoustic words.

Innorobo 2014

Poppy, the flagship robot of Flowers, welcomed professionals, students, and enthusiasts at the INRIA booth. During the opening, Gérard Collomb, the mayor of Lyon, and Bruno Bonnell, president of Syrobo (the French service robotics trade association), had the chance to meet Poppy and to watch it walk while held by the hand. The demonstration reminded them of the time when their own children were learning to walk!

Flowers also presented its “Semi autonomous third hand” project, which aims to investigate the research questions essential to the development of cobots (short for “collaborative robots”), the robots of the future that share collaborative tasks with humans. At the show, the American robot Baxter, designed for assembly-line work and programmed by demonstration rather than by coding, and the Hercule exoskeleton from the French company RB3D were two other examples of the emergence of cobotics. This branch of robotics is ready to find its place in industry, where the world of humans and that of robots are still systematically separated by massive safety fences.

When the show closed, it was time for a family photo of many of the humanoids present at Innorobo. From left to right in the background: Reem-C, Romeo, Nao, Aria, Poppy, and in the foreground Buddy, Robonova, Darwin and Hovis Lite.

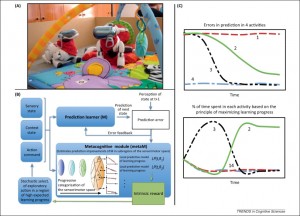

Information-seeking, curiosity, and attention: computational and neural mechanisms

We just published a milestone article on information seeking, curiosity and attention in human brains and robots, in Trends in Cognitive Sciences. This is the result of our ongoing collaboration with Jacqueline Gottlieb and her Cognitive Neuroscience Lab at Columbia University, NY (in the context of the Inria associated team project “Neurocuriosity”).

Information Seeking, Curiosity and Attention: Computational and Neural Mechanisms

Gottlieb, J., Oudeyer, P-Y., Lopes, M., Baranes, A. (2013)

Trends in Cognitive Sciences, 17(11), pp. 585-596. http://dx.doi.org/10.1016/j.tics.2013.09.001 [Bibtex] [Pdf preprint]

Abstract:

Intelligent animals devote much time and energy to exploring and obtaining information, but the underlying mechanisms are poorly understood. We review recent developments on this topic that have emerged from the traditionally separate fields of machine learning, eye movements in natural behavior, and studies of curiosity in psychology and neuroscience. These studies show that exploration may be guided by a family of mechanisms that range from automatic biases toward novelty or surprise to systematic searches for learning progress and information gain in curiosity-driven behavior. In addition, eye movements reflect visual information searching in multiple conditions and are amenable for cellular-level investigations. This suggests that the oculomotor system is an excellent model system for understanding information-sampling mechanisms.

Highlights

• Information-seeking can be driven by extrinsic or intrinsic rewards.

• Curiosity may result from an intrinsic desire to reduce uncertainty.

• Curiosity-driven learning is evolutionarily useful and can self-organize development.

• Eye movements can provide an excellent model system for information-seeking.

• Computational and neural approaches converge to further our understanding of information-seeking.

Robots: shared intelligence, “Le Monde” covers open-source humanoid robotics

In the Wednesday, September 4, 2013 edition of Le Monde (Science and Medicine section), an article discusses “open-source” humanoid robotics, and in particular the way it catalyzes collaborative research projects on cognition, language, and learning.

The article discusses in particular the iCub project (a platform we used in the ANR MACSi project to model affordance learning driven by artificial curiosity, see this article), as well as the Poppy robot, an open-source, low-cost humanoid platform based on 3D printing, which we are about to release publicly (see here a first article).

ICDL-EpiRob2013: Can robots discover spoken words and their connection to human gestures?

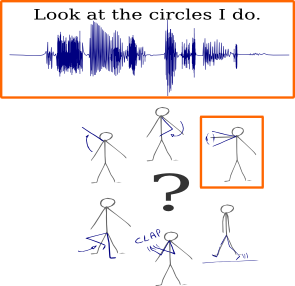

Next week, we will present our paper Learning Multimodal Semantic Components from Subsymbolic Perception Using NMF at the third joint ICDL-EpiRob conference in Osaka, Japan. In that article we demonstrate that it is possible to learn semantic concepts, each associated with both a word in spoken utterances and a gesture, by looking only at correlations between subsymbolic representations of the two modalities.
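To make the idea more concrete, here is a minimal Python sketch of how a joint dictionary could be learned from two modalities with non-negative matrix factorization (NMF). It is not the code of the paper: the toy data, the function names `nmf` and `infer_coefficients`, and the choice of the classic multiplicative updates for the Frobenius loss are all assumptions made for illustration. Each sample is the concatenation of a nonnegative "sound" vector and a nonnegative "motion" vector, and the learned dictionary is then used to predict motion from sound alone.

```python
import numpy as np


def nmf(V, k, n_iter=500, seed=0, eps=1e-9):
    """Factorize nonnegative V (samples x features) as W @ H using
    multiplicative updates for the Frobenius loss."""
    rng = np.random.default_rng(seed)
    n, m = V.shape
    W = rng.random((n, k)) + eps
    H = rng.random((k, m)) + eps
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H


def infer_coefficients(V, H, n_iter=500, seed=0, eps=1e-9):
    """Fit nonnegative coefficients W for data V, keeping the dictionary H fixed."""
    rng = np.random.default_rng(seed)
    W = rng.random((V.shape[0], H.shape[0])) + eps
    for _ in range(n_iter):
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W


# Hypothetical toy data: 3 hidden concepts, each with a sound and a motion signature.
rng = np.random.default_rng(42)
n_concepts, d_sound, d_motion = 3, 20, 15
sound_proto = rng.random((n_concepts, d_sound))
motion_proto = rng.random((n_concepts, d_motion))
activations = rng.random((100, n_concepts))
V_sound = activations @ sound_proto
V_motion = activations @ motion_proto

# Learn one dictionary from the concatenated modalities.
V = np.hstack([V_sound, V_motion])
W, H = nmf(V, k=n_concepts)
H_sound, H_motion = H[:, :d_sound], H[:, d_sound:]
joint_err = np.linalg.norm(V - W @ H) / np.linalg.norm(V)

# Cross-modal use: observe sound only, then reconstruct the motion part.
W_from_sound = infer_coefficients(V_sound, H_sound)
motion_pred = W_from_sound @ H_motion
cross_err = np.linalg.norm(motion_pred - V_motion) / np.linalg.norm(V_motion)

print("relative error of the joint factorization:", joint_err)
print("relative error of motion predicted from sound:", cross_err)
```

The point of the sketch is that no labels are used: the dictionary rows tie sound and motion features together only because they co-occur in the training samples.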

More details about the work can be found on that page.

Mangin O., Oudeyer P.Y., Learning semantic components from sub-symbolic multi-modal perception. To appear in the third Joint IEEE International Conference on Development and Learning and on Epigenetic Robotics (ICDL-EpiRob 2013), Osaka (Japan). [bibtex] [poster] [code] [details]

Flowers at Maths Land Competition

The Flowers team was invited by CapSciences to the award ceremony of the Maths Land student competition, held on May 29th at the science museum during the Mathematics Week organized by the APMEP (the Association of the Mathematics professors of Public education / l’Association des Professeurs de Mathématiques de l’Enseignement Public).

As the team is taking part in the development of the major exhibition CervoRama, this was an opportunity to present the team's research to the students, to explain what a job in academia entails, and to discuss the various experiments on display.

During the workshop, the goal was to help them discover what innovation is, to stimulate their curiosity by having them meet researchers, and to spark their imagination by asking them to find applications for technologies they had just learned about. The students acted as “young reporters” whose mission was to produce a report about innovation. To do so, they could interview three researchers and engineers working on digital innovations. At the end of the interviews, they presented their vision of innovation in a video.

Active learning of motor skills with intrinsically motivated goal babbling in robots

We have recently published an extensive article describing the SAGG-RIAC architecture, which allows efficient active learning of motor skills in high dimensions with intrinsically motivated goal babbling in robots.

Baranes, A., Oudeyer, P-Y. (2013) Active Learning of Inverse Models with Intrinsically Motivated Goal Exploration in Robots, Robotics and Autonomous Systems, 61(1), pp. 49-73. http://dx.doi.org/10.1016/j.robot.2012.05.008.
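For readers unfamiliar with goal babbling, the following Python sketch gives a much-simplified flavor of the idea. It is not the SAGG-RIAC algorithm from the article, which is considerably more elaborate. Everything here is an assumption made for illustration: the two-joint planar arm, the fixed grid of goal regions, the `interest` measure (change in mean reaching error between two recent windows), and the nearest-neighbor inverse model. The agent picks goals in the space of hand positions, prefers regions where its reaching error is currently changing, and tries to reach each goal by perturbing the best motor command it already knows.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 4                      # the goal space [-2, 2]^2 is cut into an N x N grid
LOW, HIGH = -2.0, 2.0


def forward(q):
    """Hypothetical 2-joint planar arm with unit links: joint angles -> hand (x, y)."""
    return np.array([np.cos(q[0]) + np.cos(q[0] + q[1]),
                     np.sin(q[0]) + np.sin(q[0] + q[1])])


def region_of(goal):
    """Index of the grid cell that contains a goal."""
    cell = np.clip(((goal - LOW) / (HIGH - LOW) * N).astype(int), 0, N - 1)
    return int(cell[0] * N + cell[1])


def interest(errs, window=10):
    """Absolute change in mean reaching error between two recent windows."""
    if len(errs) < 2 * window:
        return 1.0  # optimistic value so that every region gets tried
    return abs(np.mean(errs[-2 * window:-window]) - np.mean(errs[-window:]))


def sample_goal(errors, eps=0.2):
    """Pick a region (mostly by interest, sometimes at random), then a goal in it."""
    if rng.random() < eps:
        region = int(rng.integers(N * N))
    else:
        scores = np.array([interest(e) for e in errors]) + 1e-6
        region = int(rng.choice(N * N, p=scores / scores.sum()))
    size = (HIGH - LOW) / N
    corner = LOW + size * np.array([region // N, region % N])
    return corner + size * rng.random(2)


def explore(n_boot=20, n_iter=500, noise=0.1):
    """Run goal babbling and return commands, outcomes, and per-region errors."""
    # Bootstrap the memory with a few random motor commands.
    Q = [rng.uniform(-np.pi, np.pi, 2) for _ in range(n_boot)]
    X = [forward(q) for q in Q]
    errors = [[] for _ in range(N * N)]
    for _ in range(n_iter):
        goal = sample_goal(errors)
        # Nearest-neighbor inverse model: reuse the best known command, plus noise.
        nearest = int(np.argmin(np.linalg.norm(np.array(X) - goal, axis=1)))
        q = Q[nearest] + noise * rng.standard_normal(2)
        x = forward(q)
        Q.append(q)
        X.append(x)
        errors[region_of(goal)].append(float(np.linalg.norm(x - goal)))
    return np.array(Q), np.array(X), errors


Q, X, errors = explore()
print("goals attempted per region:", [len(e) for e in errors])
```

In this toy setting, goals in the corners of the grid lie outside the arm's reach, so their error stops changing and the sampler tends to spend less time on them.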

Learning to recognize parts of choreographies with NMF

Original post: http://omangin.tumblr.com/

How to learn simple gestures from the observation of choreographies that are combinations of these gestures?

We explored this question in our paper:

We believe that this question is of scientific interest for two main reasons.

Choreographies are combinations of parallel primitive gestures

Natural human motions are highly combinatorial in many ways. Sequences of gestures such as “walk to the fridge; open the door; grab a bottle; open it; …” are a good example of the combination of simple gestures, also called primitive motions, into a complex activity. Being able to discover the parts of a complex activity by observation, learn them, reproduce them, and compose them into new activities is thus an important step towards imitation learning and human behavior understanding in robots and intelligent systems.

Tutorial: How to get a skeleton from Kinect + OpenNI through ROS?

This tutorial goes very quickly through the concepts and steps required to acquire, through the ROS framework, a skeleton detected by the Microsoft Kinect device and the OpenNI driver and middleware.

Motivations

Getting a working OpenNI + NITE installation sounds like a nightmare to more than a few people who have tried to do it on their own. Getting a working API binding for it in your favorite language can also be a difficult quest.

For these reasons a lot of people use versions of OpenNI bundled in other frameworks, which generally means that other people have taken care of fixing the OpenNI + NITE installation process and maintain a working API.

While this might be seen as adding another (useless?) layer on top of so many abstraction layers, it often saves you a lot of boring work.

Finally, and this is more for roboticists, using ROS as such a framework has many advantages, including:

- access to the device in any language which has ROS bindings,

- immediate and effortless access to the device over the network,

- interoperability with other ROS applications, and benefits of the ROS visualization tools,

- etc.