Flowers Laboratory

FLOWing Epigenetic Robots and Systems

Publications

New PlosOne paper: Exploiting task constraints for self-calibrated brain-machine interface control using error-related potentials.

Our work on calibration-free interaction, applied to brain-computer interfaces, has been published in PlosOne.

Title: Exploiting task constraints for self-calibrated brain-machine interface control using error-related potentials.

Authors: I. Iturrate, J. Grizou, J. Omedes, P-Y. Oudeyer, M. Lopes and L. Montesano

Abstract: This paper presents a new approach for self-calibration BCI for reaching tasks using error-related potentials. The proposed method exploits task constraints to simultaneously calibrate the decoder and control the device, by using a robust likelihood function and an ad-hoc planner to cope with the large uncertainty resulting from the unknown task and decoder. The method has been evaluated in closed-loop online experiments with 8 users using a previously proposed BCI protocol for reaching tasks over a grid. The results show that it is possible to have a usable BCI control from the beginning of the experiment without any prior calibration. Furthermore, comparisons with simulations and previous results obtained using standard calibration hint that both the quality of recorded signals and the performance of the system were comparable to those obtained with a standard calibration approach.

The code for replicating our experiments can be found on GitHub: https://github.com/flowersteam/self_calibration_BCI_plosOne_2015

The pdf of the paper is available at: https://github.com/flowersteam/self_calibration_BCI_plosOne_2015/releases/download/plosOne/iturrate2015exploiting.pdf
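The core idea of exploiting task constraints is to score each candidate task by how consistently it explains the user's brain signals. A toy sketch can illustrate this. Everything below is an assumption made for illustration and is not the paper's code, which is in the repository above. The sketch uses a hypothetical 1-D grid of 10 positions, random moves instead of a planner, and one synthetic "ErrP-like" feature per move, drawn around -1 for correct moves and +1 for erroneous ones. For every target hypothesis, the past moves are labeled the way that target would label them, a two-Gaussian model is fitted to the features under those labels, and the hypothesis with the highest likelihood is kept. No decoder is calibrated beforehand: each hypothesis brings its own.

```python
import numpy as np

N_POSITIONS = 10  # hypothetical 1-D grid with positions 0..9


def move_is_correct(pos, step, target):
    """A move counts as correct if it brings the device closer to the target."""
    return abs(pos + step - target) < abs(pos - target)


def simulate_session(true_target, n_moves=200, noise=0.3, seed=0):
    """Random moves plus one synthetic 'ErrP-like' feature per move.

    Assumption for illustration: correct moves yield features near -1 and
    erroneous moves near +1. The learner never sees true_target or this rule.
    """
    rng = np.random.default_rng(seed)
    positions = rng.integers(1, N_POSITIONS - 1, size=n_moves)  # 1..8, so moves stay on the grid
    steps = rng.choice([-1, 1], size=n_moves)
    correct = np.array([move_is_correct(p, s, true_target) for p, s in zip(positions, steps)])
    features = np.where(correct, -1.0, 1.0) + rng.normal(0.0, noise, size=n_moves)
    return positions, steps, features


def hypothesis_log_likelihood(positions, steps, features, target):
    """Label past moves as if `target` were the goal, fit one Gaussian per
    label (shared variance) and return the maximised log-likelihood."""
    labels = np.array([move_is_correct(p, s, target) for p, s in zip(positions, steps)])
    residuals = np.empty_like(features)
    for value in (True, False):
        mask = labels == value
        if mask.any():
            residuals[mask] = features[mask] - features[mask].mean()
    variance = np.mean(residuals ** 2)
    return -0.5 * len(features) * (np.log(2.0 * np.pi * variance) + 1.0)


def score_targets(positions, steps, features):
    """Return the best target hypothesis and the score of every hypothesis."""
    scores = [hypothesis_log_likelihood(positions, steps, features, t) for t in range(N_POSITIONS)]
    return int(np.argmax(scores)), scores


positions, steps, features = simulate_session(true_target=6)
best_target, scores = score_targets(positions, steps, features)
print("most likely target:", best_target)
```

In this simplified version, hypotheses whose labelings are exact mirror images of each other (here, targets 0 and 9) always receive identical scores, because swapping the two labels does not change a two-Gaussian fit.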

The emergence of multimodal concepts – defense video

The video of the PhD defense of Olivier Mangin is finally out!

The full dissertation is available at olivier.mangin.com/publi.

Olivier’s work focused on learning recurring patterns in multimodal perception. To that end, he developed cognitive systems that model the mechanisms providing such capabilities to infants, a methodology that fits into the team’s field of developmental robotics.

More precisely, his thesis revolves around two main topics: on the one hand, the ability of infants or robots to imitate and understand human behaviors, and on the other, the acquisition of language. At the intersection of these topics, it studies how a developmental cognitive agent can discover a dictionary of primitive patterns from its multimodal perceptual flow. It specifies this problem and formulates its links with Quine’s indeterminacy of translation and with blind source separation, as studied in acoustics.

The thesis sequentially studies four sub-problems and provides an experimental formulation of each of them. It then describes and tests computational models of agents solving these problems. These models rely in particular on bag-of-words techniques, matrix factorization algorithms, and inverse reinforcement learning approaches.

It first goes in depth into the three separate problems of learning primitive sounds, such as phonemes or words, learning primitive dance motions, and learning the primitive objectives that compose complex tasks. Finally, it studies the problem of learning multimodal primitive patterns, which amounts to solving several of the aforementioned problems simultaneously. It also details how this last problem models the grounding of acoustic words.

AAAI-14 Calibration-Free BCI Based Control

We have a new paper accepted to the 2014 AAAI Conference on Artificial Intelligence, to be held in July 2014 in Quebec, Canada. We present a method that allows a user to teach an agent a new task by mentally assessing the agent’s actions, without any calibration procedure. It is joint work with Iñaki Iturrate (EPFL) and Luis Montesano (Univ. Zaragoza).

ICDL-EpiRob2013: Can robots discover spoken words and their connection to human gestures?

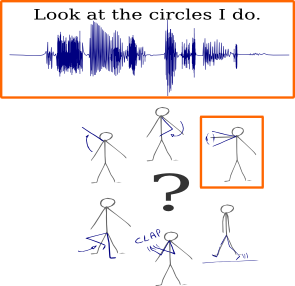

Next week, we will present our paper Learning Multimodal Semantic Components from Subsymbolic Perception Using NMF at the third joint ICDL-EpiRob conference in Osaka, Japan. In that article we demonstrate that it is possible to learn semantic concepts, each of which is associated with both a word in spoken utterances and a gesture, by only looking at correlations between subsymbolic representations of the two modalities.

More details about the work can be found on that page.

Mangin O., Oudeyer P.-Y., Learning semantic components from sub-symbolic multi-modal perception. To appear in the third Joint IEEE International Conference on Development and Learning and on Epigenetic Robotics (ICDL-EpiRob 2013), Osaka (Japan). [bibtex] [poster] [code] [details]
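Here is a toy sketch of learning components that span two modalities. It is not the paper's implementation. Each observation stacks a hypothetical 4-D "sound" vector on top of a 4-D "gesture" vector, and the standard Lee-Seung multiplicative-update NMF learns a dictionary from the stacked data. Because each learned component covers both halves, the gesture half can be used to infer activations for a gesture observed alone. The sound half then predicts which sound features should accompany that gesture. The data, dimensions, and function names are made up for illustration.

```python
import numpy as np


def nmf(V, k, n_iter=1000, seed=0, eps=1e-9):
    """Factorize V ≈ W @ H with W, H >= 0 using Lee-Seung multiplicative
    updates for the squared Frobenius loss. Returns W, H and the error curve."""
    rng = np.random.default_rng(seed)
    W = rng.random((V.shape[0], k)) + eps
    H = rng.random((k, V.shape[1])) + eps
    errors = []
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
        errors.append(np.linalg.norm(V - W @ H))
    return W, H, errors


def infer_activations(W_part, v_part, n_iter=1000, eps=1e-9):
    """Keep (part of) the dictionary fixed and fit only nonnegative activations."""
    h = np.ones((W_part.shape[1], 1))
    for _ in range(n_iter):
        h *= (W_part.T @ v_part) / (W_part.T @ W_part @ h + eps)
    return h


# Toy data: 2 hidden concepts, each tied to a 4-D "sound" pattern and a
# 4-D "gesture" pattern. Each observation column stacks both modalities.
sound_patterns = np.array([[1, 1, 0, 0], [0, 0, 1, 1]], dtype=float).T    # 4 x 2
gesture_patterns = np.array([[0, 1, 1, 0], [1, 0, 0, 1]], dtype=float).T  # 4 x 2
rng = np.random.default_rng(1)
mixtures = rng.random((2, 40))  # strength of each concept in 40 observations
V = np.vstack([sound_patterns, gesture_patterns]) @ mixtures             # 8 x 40

W, H, errors = nmf(V, k=2)

# Cross-modal use: observe only the gesture of concept 0, then predict the
# accompanying sound features from the sound rows of the learned dictionary.
h = infer_activations(W[4:], gesture_patterns[:, [0]])
predicted_sounds = W[:4] @ h
print(np.round(predicted_sounds.ravel(), 2))
```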

ICDL-Epirob 2013: Learning how to learn

In a few weeks, in Osaka, Japan, we will present our latest work on enabling robots to choose autonomously how they learn, in an article titled Autonomous Reuse of Motor Exploration Trajectories.

We decided to explore the idea of enabling a robot to modify its own learning method based on its previous experience. Humans do the same: when studying, for instance when memorizing something, they explore different strategies: reading it several times, rewriting it, reciting it aloud, visualizing it, and repeating the learning sessions, or learning it only once, the night before the test. Each individual eventually chooses a preferred strategy and tweaks its specifics as it is reused, often based on its perceived effectiveness. Humans learn how to learn.

In our work, we focused on how a robot could improve the way it explores a new, unknown task. The exploration strategy is an important factor in learning effectiveness, as work on intrinsic motivation by our team has demonstrated. Moreover, autonomous robots, while potentially subjected to very diverse situations, retain a constant morphology: their kinematics and dynamics remain stable, and their motor space, the set of possible motor commands, stays the same. Our hypothesis was that some exploration strategies are a priori more effective for a given robot, and that those strategies can be uncovered by analyzing past learning experience, that is, past exploration trajectories. Using those exploration strategies should then increase learning performance compared to random ones.

Our experiments confirmed this. Through autonomous empirical measurement, we identified the motor commands of a first task that belonged to areas where learning had been most effective. We then reused them on a similar but different task, where the robot did not have access to the learning experience of the first task. Early learning performance increased significantly. Our method only requires that the motor space stays the same. The sensory space can be arbitrarily different, which makes it possible to reuse an exploration strategy to learn in another modality. The method also makes no assumptions about the learning algorithms used, which can even differ between tasks.

The article is available here.

We released the code used to run the experiments, so that anyone can reproduce and analyze them. You can access it here.

Reference: Fabien Benureau, Pierre-Yves Oudeyer, “Autonomous Reuse of Motor Exploration Trajectories”, in the proceedings of ICDL-Epirob 2013, Osaka, Japan.
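The reuse idea can be made more concrete with a sketch built on explicit assumptions. It is not the method from the article. Each past motor command from the first task gets a hypothetical "error drop" score, meaning how much the learner's prediction error decreased after trying it, and the best-scoring commands are kept. Exploration on the new task then mixes small perturbations of those commands with uniformly random ones. The score, the 50/50 mixing ratio, the noise level, and the function names are all illustrative choices. Only motor commands are reused, so nothing in the sketch depends on the sensory space of either task.

```python
import numpy as np


def select_reusable_commands(commands, error_drops, n_keep):
    """Keep the n_keep past motor commands with the largest (hypothetical)
    learning-effectiveness score, best first."""
    order = np.argsort(error_drops)[::-1]
    return commands[order[:n_keep]]


def exploration_batch(reused, n_samples, motor_low, motor_high,
                      p_reuse=0.5, noise=0.05, seed=0):
    """Draw new motor commands: with probability p_reuse, perturb a reused
    command; otherwise sample uniformly from the motor space."""
    rng = np.random.default_rng(seed)
    dim = reused.shape[1]
    batch = np.empty((n_samples, dim))
    for i in range(n_samples):
        if rng.random() < p_reuse:
            base = reused[rng.integers(len(reused))]
            batch[i] = base + rng.normal(0.0, noise, size=dim)
        else:
            batch[i] = rng.uniform(motor_low, motor_high, size=dim)
    # The motor space is shared by both tasks, so clip to its bounds.
    return np.clip(batch, motor_low, motor_high)


# Usage on made-up data: 100 past 3-D motor commands from a first task.
rng = np.random.default_rng(2)
past_commands = rng.uniform(-1.0, 1.0, size=(100, 3))
past_error_drops = rng.random(100)  # placeholder scores, not real measurements
reused = select_reusable_commands(past_commands, past_error_drops, n_keep=10)
batch = exploration_batch(reused, n_samples=50, motor_low=-1.0, motor_high=1.0)
print(batch.shape)
```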

ICDL-Epirob 2013: Robot Learning Simultaneously a Task and How to Interpret Human Instructions

Can a robot learn a new task if the task is unknown and the user is providing unknown instructions?

We explored this question in our paper: Robot Learning Simultaneously a Task and How to Interpret Human Instructions. To appear in Joint IEEE International Conference on Development and Learning and on Epigenetic Robotics (ICDL-EpiRob), Osaka, Japan (2013).

In this paper we present an algorithm to bootstrap shared understanding in a human-robot interaction scenario where the user teaches a robot a new task using teaching instructions yet unknown to it. In such cases, the robot needs to estimate simultaneously what the task is and what the instructions received from the user mean. For this work, we consider a scenario where a human teacher uses initially unknown spoken words, whose unknown meaning is either feedback (good/bad) or guidance (go left, right, …). We present computational results, within an inverse reinforcement learning framework, showing that a) it is possible to learn the meaning of unknown and noisy teaching instructions and a new task at the same time, b) it is possible to reuse the acquired knowledge about instructions for learning new tasks, and c) even if the robot initially knows some of the instructions’ meanings, the use of extra unknown teaching instructions improves learning efficiency.

Learn more from my webpage: https://flowers.inria.fr/jgrizou/

Learning to recognize parts of choreographies with NMF

Original post: http://omangin.tumblr.com/

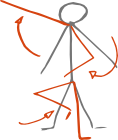

How to learn simple gestures from the observation of choreographies that are combinations of these gestures?

We explored this question in our paper:

We believe that this question is of scientific interest for two main reasons.

Choreographies are combinations of parallel primitive gestures

Natural human motions are highly combinatorial in many ways. Sequences of gestures such as “walk to the fridge; open the door; grab a bottle; open it; …” are a good example of simple gestures, also called primitive motions, combined into a complex activity. Being able to discover the parts of a complex activity by observation, learn them, reproduce them, and compose them into new activities is thus an important step towards imitation learning and human behavior understanding capabilities for robots and intelligent systems.