Flowers Laboratory

FLOWing Epigenetic Robots and Systems


Calibration-Free Human-Machine Interfaces – Thesis Defense

Jonathan Grizou defended his thesis entitled Learning From Unlabeled Interaction Frames on October 24, 2014.

The video, slides, and thesis manuscript can be found at this link: http://jgrizou.com/projects/thesis_defense/

Keywords: Learning from Interaction, Human-Robot Interaction, Brain-Computer Interfaces, Intuitive and Flexible Interaction, Robotics, Symbol Acquisition, Active Learning, Calibration.

Abstract: This thesis investigates how a machine can be taught a new task from unlabeled human instructions, that is, without knowing beforehand how to associate the human communicative signals with their meanings. The theoretical and empirical work presented in this thesis provides means to create calibration-free interactive systems, which allow humans to interact with machines, from scratch, using their own preferred teaching signals. It therefore removes the need for an expert to tune the system for each specific user, which constitutes an important step towards flexible personalized teaching interfaces, a key for the future of personal robotics.

Our approach assumes the robot has access to a limited set of task hypotheses, which includes the task the user wants to solve. Our method consists of generating interpretation hypotheses of the teaching signals with respect to each hypothetical task. Having built a set of hypothetical interpretations, i.e. a set of signal-label pairs for each task, we identify the task the user wants to solve as the one that best explains the history of interaction.

We consider different scenarios, including a pick-and-place robotics experiment with speech as the modality of interaction, and a navigation task in a brain-computer interaction scenario. In these scenarios, a teacher instructs a robot to perform a new task using initially unclassified signals, whose associated meaning can be feedback (correct/incorrect) or guidance (go left, right, up, ...). Our results show that a) it is possible to learn the meaning of unlabeled and noisy teaching signals, as well as a new task, at the same time, and b) it is possible to reuse the acquired knowledge about the teaching signals to learn new tasks faster. We further introduce a planning strategy that exploits uncertainty about the task and the signals’ meanings to allow more efficient learning sessions. We present a study where several real human subjects successfully control a virtual device using their brain, without relying on a calibration phase. Our system identifies, from scratch, the target intended by the user as well as the decoder of brain signals.

Based on this work, but from another perspective, we introduce a new experimental setup to study how humans behave in asymmetric collaborative tasks. In this setup, two humans have to collaborate to solve a task but the channels of communication they can use are constrained and force them to invent and agree on a shared interaction protocol in order to solve the task. These constraints allow analyzing how a communication protocol is progressively established through the interplay and history of individual actions.
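The core idea of the abstract above (label the teaching signals as each candidate task would predict, then keep the task whose labeling is the most coherent) can be illustrated with a toy sketch. This is not the algorithm from the thesis: the 1D world, the scalar signals, the `HISTORY` data, and the within-group scatter score are all assumptions made up for illustration, standing in for the real signal classifiers.

```python
from statistics import mean

def is_correct(state, action, target):
    """True if the action moves the agent closer to the hypothesized target."""
    return abs(state + action - target) < abs(state - target)

def scatter(values):
    """Sum of squared deviations from the mean (0 for an empty group)."""
    if not values:
        return 0.0
    m = mean(values)
    return sum((v - m) ** 2 for v in values)

def score_task(history, target):
    """Label every signal as the hypothesized task predicts, then measure
    how tightly the signals cluster within each label (lower is better)."""
    groups = {True: [], False: []}
    for state, action, signal in history:
        groups[is_correct(state, action, target)].append(signal)
    return scatter(groups[True]) + scatter(groups[False])

def infer_task(history, candidate_targets):
    """Return the candidate task whose labeling best explains the signals."""
    return min(candidate_targets, key=lambda t: score_task(history, t))

# Hypothetical interaction history: (state, action, raw teaching signal).
# The teacher has target 3 in mind; the learner is never told which
# signal values mean "correct" and which mean "incorrect".
HISTORY = [
    (1, +1, 0.9), (1, -1, -1.1), (5, +1, -0.8),
    (5, -1, 1.2), (2, +1, 1.0), (4, +1, -1.0),
]

print(infer_task(HISTORY, [0, 3, 6]))  # → 3
```

Only the true task yields two tight groups of signals. Under the wrong targets, the "correct" and "incorrect" groups each mix both kinds of signal, so their scatter is much larger. Note that targets 0 and 6 produce mirrored labelings and hence identical scores, a symmetry that a real system would need to handle.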

UAI-14 Interactive Learning from Unlabeled Instructions

We have a new paper accepted to the 2014 Conference on Uncertainty in Artificial Intelligence (UAI), to be held in July 2014 in Quebec, Canada. It is joint work with Iñaki Iturrate (EPFL) and Luis Montesano (Univ. Zaragoza).

Abstract: Interactive learning deals with the problem of learning and solving tasks using human instructions. It is common in human-robot interaction, tutoring systems, and in human-computer interfaces such as brain-computer ones. In most cases, learning these tasks is possible because the signals are predefined or an ad-hoc calibration procedure allows signals to be mapped to specific meanings. In this paper, we address the problem of simultaneously solving a task under human feedback and learning the associated meanings of the feedback signals. This has important practical applications, since the user can start controlling a device from scratch, without the need for an expert to define the meaning of signals or to carry out a calibration phase. The paper proposes an algorithm that simultaneously assigns meanings to signals while solving a sequential task, under the assumption that both human and machine share the same prior on the possible instruction meanings and the possible tasks. Furthermore, we show using synthetic and real EEG data from a brain-computer interface that taking into account the uncertainty of the task and the signal is necessary for the machine to actively plan how to solve the task efficiently.

Tour of the Web of February

Each month, I collect interesting stories related to the scientific interests of the team, in science, robotics, and education. Here are the highlights of February.

Hard Science

Scientists have observed a curious trend that seems to affect their research. They first publish a result about a newly observed effect, apparently proving it conclusively. Then studies of the phenomenon by other scientists follow, largely corroborating the new idea. But then the observed effect loses strength as the years and studies pile up. The authors of the original research find themselves unable to replicate their own findings, despite the apparent absence of methodological errors in the original article. Jonathan Schooler, a victim of the phenomenon, termed the effect “cosmic habituation”, as if nature were habituating to scientists' ideas over time. This draws a somber and sobering picture of the effect scientists have on their data.

New Yorker article:

http://www.newyorker.com/reporting/2010/12/13/101213fa_fact_lehrer?currentPage=all

The problem of bad published results has dawned on enough scientists that some of them are organising. John Ioannidis in particular, author of the 2005 article “Why most published research findings are false”, has worked to develop meta-research, or research about research, and is launching the Meta-Research Innovation Centre at Stanford next month.

The Economist article:

http://www.economist.com/news/science-and-technology/21598944-sloppy-researchers-beware-new-institute-has-you-its-sights-metaphysicians

Journal article “Why most published research findings are false”:

http://www.plosmedicine.org/article/info%3Adoi%2F10.1371%2Fjournal.pmed.0020124

No Science Left Behind

The New York Times and Nature are running pieces on how American science is increasingly financed by wealthy individuals, against a backdrop of budget cuts. The money is usually aimed at trendy subjects, which could skew scientific research to the detriment of less fashionable fields and basic research, prompting Nature to publish a warning to that effect.

New York Times article:

http://www.nytimes.com/2014/03/16/science/billionaires-with-big-ideas-are-privatizing-american-science.html

Nature article:

http://www.nature.com/neuro/journal/v11/n10/full/nn1008-1117.html

Concurrently, the BRAIN Initiative got a big boost in public financing, announced by Obama, from $100 million to $200 million. The project's scientific committee also published a report with a more detailed list of the goals of the initiative.

White House press release:

http://www.whitehouse.gov/sites/default/files/microsites/ostp/FY%202015%20BRAIN.pdf

Executive summary (6 pages):

http://acd.od.nih.gov/presentations/BRAIN-Interim-Report-Executive-Summary.pdf

Education

Math “is fundamentally about patterns and structures, rather than ‘little manipulations of numbers,’” says math educator Maria Droujkova. But the way mathematics is taught, she claims, makes that hard to see, and the mechanical drills imposed on us from an early age often prevent children from ever enjoying mathematics. Droujkova defends the idea of moving from “simple but hard” activities (rote learning of multiplication tables) to “complex but easy” ones (Lego or snowflake cut-outs to learn symmetry). Well worth the read.

The Atlantic article:

http://www.theatlantic.com/education/archive/2014/03/5-year-olds-can-learn-calculus/284124/

The Computing Research Association has published a sneak preview of its 2013 Taulbee Report, to be published in May in CRN, which looks at enrolment figures in CS in the US. The most notable part is the 22% year-over-year increase in BS enrolment.

Press release:

http://cra.org/resources/crn-online-view/2013_taulbee_report_sneak_preview/

The robot comes from the US, but the open-source software suite that powers it is French. And it is at the lycée La Martinière Monplaisir, in Lyon, that it is being deployed. This telepresence robot is aimed at students who are temporarily unable to be physically present. It makes it possible to interact in class with the teacher and other students, through what they call, tongue-in-cheek, “robodylanguage”. (shared by Nicolas Jahier)

LyonMag article (in French):

http://www.lyonmag.com/article/61805/le-premier-robot-lyceen-a-ete-presente-a-lyon

Video from FranceTVInfo (in French):

http://www.francetvinfo.fr/societe/education/video-un-robot-lyceen-va-en-cours-a-la-place-des-eleves_511097.html

Musician Cyborg

Science meets art, and creates new things. Gil Weinberg of the Georgia Tech Center for Music Technology (which he founded) created (or, more probably, supervised the creation of) a robotic prosthesis that uses electromyography to control a first drumstick. A second drumstick is also present on the prosthesis, but is controlled algorithmically. For the moment, the second drumstick runs in open loop, with the drummer, Jason Barnes, closing the loop by responding to the rhythms of the second stick. In the future, synchronisation routines and machine learning are envisioned to create a more reactive, yet autonomous, second stick.

Video:

http://www.youtube.com/watch?v=ntrlHw6f4E4

Press release:

http://www.gtcmt.gatech.edu/news/robotic-prosthesis-turns-drummer-into-a-three-armed-cyborg

Robots are less impressed by a three-drumstick drummer. After all, with a 22-armed drummer and a 78-fingered guitar player coming from Japan, they are showing off their ability to play with arbitrarily many independent limbs. Squarepusher, the composer, is pushing the notion that for music to be emotionally powerful, it doesn’t necessarily have to be performed by humans. (shared by Clément Moulin-Frier)

Pitchfork short story (with video):

http://pitchfork.com/news/53991-squarepusher-collaborates-with-robots-on-new-ep/

Innorobo 2014

Flowers' flagship robot, Poppy, welcomed professionals, students, and enthusiasts at the INRIA booth. During the opening, Gérard Collomb, the mayor of Lyon, and Bruno Bonnell, president of Syrobo (the French service robotics trade association), had the chance to meet Poppy and to see it walk while held by the hand. The demonstration reminded them of the time when their own children were learning to walk!

Flowers also presented its “Semi autonomous third hand” project, which aims to investigate the research questions essential to the development of cobots (“collaborative robots”), the robots of the future that share collaborative tasks with humans. At the show, the American robot Baxter, designed for assembly-line work and programmed by demonstration rather than by coding, and the Hercule exoskeleton from the French company RB3D were two other examples of the emergence of cobotics. This branch of robotics is ready to find its place in industrial settings, where the world of humans and the world of robots are still systematically separated by massive safety fences.

When the show closed, it was time for a family photo for many of the humanoids present at Innorobo. From left to right in the background: Reem-C, Romeo, Nao, Aria, Poppy; and in the foreground: Buddy, Robonova, Darwin, and Hovis Lite.

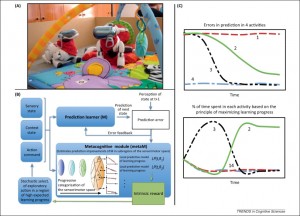

Information-seeking, curiosity, and attention: computational and neural mechanisms

We just published a milestone article on information seeking, curiosity and attention in human brains and robots, in Trends in Cognitive Sciences. This is the result of our ongoing collaboration with Jacqueline Gottlieb and her Cognitive Neuroscience Lab at Columbia University, NY (in the context of the Inria associated team project “Neurocuriosity”).

Information Seeking, Curiosity and Attention: Computational and Neural Mechanisms

Gottlieb, J., Oudeyer, P-Y., Lopes, M., Baranes, A. (2013)

Trends in Cognitive Sciences, 17(11), pp. 585-596. http://dx.doi.org/10.1016/j.tics.2013.09.001

Abstract:

Intelligent animals devote much time and energy to exploring and obtaining information, but the underlying mechanisms are poorly understood. We review recent developments on this topic that have emerged from the traditionally separate fields of machine learning, eye movements in natural behavior, and studies of curiosity in psychology and neuroscience. These studies show that exploration may be guided by a family of mechanisms that range from automatic biases toward novelty or surprise to systematic searches for learning progress and information gain in curiosity-driven behavior. In addition, eye movements reflect visual information searching in multiple conditions and are amenable for cellular-level investigations. This suggests that the oculomotor system is an excellent model system for understanding information-sampling mechanisms.

Highlights

• Information-seeking can be driven by extrinsic or intrinsic rewards.

• Curiosity may result from an intrinsic desire to reduce uncertainty.

• Curiosity-driven learning is evolutionarily useful and can self-organize development.

• Eye movements can provide an excellent model system for information-seeking.

• Computational and neural approaches converge to further our understanding of information-seeking.

New paper about the Poppy platform accepted for Humanoids2013

A new scientific paper has been accepted for the Humanoids 2013 Conference, which will be held in Atlanta. Our work will be presented on Thursday, October 17, from 11:30 to 12:30 during the interactive session.

Following the description of the design in the previous paper, we decided to conduct experiments to evaluate the actual effect of Poppy's thigh shape on biped locomotion. To do so, the current design of the leg is compared with a more classic approach involving a straight thigh…

IROS

It’s our pleasure to announce that Poppy will be presented at the next IROS conference.

11:45-12:00, Paper MoAT9.4

Neuro Robotics

The associated paper mainly describes the design of Poppy’s legs.

“Robots : l’intelligence en partage”: Le Monde covers open-source humanoid robotics

The Wednesday, September 4, 2013 edition of Le Monde (Science and Medicine supplement) features an article on “open-source” humanoid robotics, and in particular on how it catalyzes collaborative research projects on cognition, language, and learning.

Among other things, the article discusses the ICub project (a platform we used in the ANR MACSi project to model affordance learning driven by artificial curiosity, see this article), as well as the Poppy robot, an open-source, low-cost humanoid platform based on 3D printing, which we are about to release publicly (see a first article here).


Meet us @ ICDL-Epirob 2013

Five members of the Flowers Team will participate in the Third Joint IEEE International Conference on Development and Learning and on Epigenetic Robotics. The conference takes place in Osaka, Japan, August 18-22.

There you can meet Fabien Benureau, Jonathan Grizou, Olivier Mangin, Clément Moulin-Frier, and Mai Nguyen. They will be happy to discuss the team's latest research and future projects.