Flowers Laboratory

FLOWing Epigenetic Robots and Systems

Blog

IROS

It’s our pleasure to announce that Poppy will be presented at the next IROS conference.

11:45-12:00, Paper MoAT9.4

Neuro Robotics

The associated paper mainly describes the design of Poppy’s legs.

Robots: L’intelligence en partage (Shared intelligence): “Le Monde” covers open-source humanoid robotics

In the Wednesday, September 4, 2013 edition of Le Monde (Science and Medicine section), an article discusses “open-source” humanoid robotics, and in particular how it catalyzes collaborative research projects on cognition, language, and learning.

The article notably discusses the iCub project (a platform we used in the ANR MACSi project to model affordance learning driven by artificial curiosity; see this article), as well as the Poppy robot, an open-source, low-cost humanoid platform based on 3D printing, which we will make public in the coming days (see a first article here).

Meet us @ ICDL-Epirob 2013

Five members of the Flowers Team will participate in the Third Joint IEEE International Conference on Development and Learning and on Epigenetic Robotics. The conference takes place in Osaka, Japan, August 18-22.

There you will meet Fabien Benureau, Jonathan Grizou, Olivier Mangin, Clément Moulin-Frier, and Mai Nguyen. They will be happy to discuss the team’s latest research and future projects.

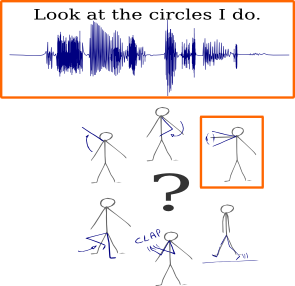

ICDL-EpiRob 2013: Can robots discover spoken words and their connection to human gestures?

Next week, we will present our paper Learning Multimodal Semantic Components from Subsymbolic Perception Using NMF at the third joint ICDL-EpiRob conference in Osaka, Japan. In that article we demonstrate that it is possible to learn semantic concepts, each associated with both a word in spoken utterances and a gesture, only by looking at correlations between subsymbolic representations of the two modalities.
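
To make the idea concrete, here is a minimal sketch (not the code released with the paper) of how non-negative matrix factorization can couple two modalities: word features and gesture features of the same demonstration are stacked into one non-negative vector, NMF learns components spanning both modalities, and a gesture alone is then used to infer activations and read off the associated word. The toy data, sizes and helper names (`nmf`, `infer_activations`, `predict_word`) are hypothetical, and the standard Lee and Seung multiplicative updates for the Euclidean loss are an assumption that may differ from the paper’s choice of cost function.

```python
import numpy as np

def nmf(V, k, n_iter=2000, seed=0, eps=1e-9):
    """Approximate V (features x samples, non-negative) as W @ H using
    Lee & Seung multiplicative updates for the squared Euclidean loss."""
    rng = np.random.default_rng(seed)
    W = rng.random((V.shape[0], k)) + eps
    H = rng.random((k, V.shape[1])) + eps
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H

def infer_activations(W, v, n_iter=2000, eps=1e-9):
    """Non-negative activations h approximately minimizing ||v - W h||, W fixed."""
    h = np.full((W.shape[1], 1), 0.5)
    for _ in range(n_iter):
        h *= (W.T @ v) / (W.T @ W @ h + eps)
    return h

# Hypothetical toy data: 3 concepts, each pairing one word (word features
# 0-2) with one gesture pattern (gesture features 3-5).
words = np.eye(3)
gestures = np.array([[1.0, 0.2, 0.0],
                     [0.0, 1.0, 0.3],
                     [0.4, 0.0, 1.0]])
components = np.vstack([words, gestures])       # 6 features x 3 concepts
mixtures = np.array([[1, 0, 0, 1, 0, 1],        # which concepts appear
                     [0, 1, 0, 1, 1, 0],        # in each of the 6 samples
                     [0, 0, 1, 0, 1, 1]], dtype=float)
V = components @ mixtures                       # joint word + gesture data

W, _ = nmf(V, k=3)
W_words, W_gestures = W[:3], W[3:]

def predict_word(gesture):
    """Infer activations from the gesture part only, then read off the word part."""
    h = infer_activations(W_gestures, gesture.reshape(-1, 1))
    return int(np.argmax(W_words @ h))

print([predict_word(gestures[:, c]) for c in range(3)])
```

No word labels are given during learning: the word/gesture association emerges only because both modalities co-occur in the same training vectors.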

More details about the work can be found on that page.

Mangin O., Oudeyer P.Y., Learning semantic components from sub-symbolic multi-modal perception. To appear in the Third Joint IEEE International Conference on Development and Learning and on Epigenetic Robotics (ICDL-EpiRob 2013), Osaka, Japan. [bibtex] [poster] [code] [details]

ICDL-Epirob 2013: Learning how to learn

In a few weeks, in Osaka, Japan, we will present our latest work on enabling robots to autonomously choose how they learn, in an article titled Autonomous Reuse of Motor Exploration Trajectories.

We decided to explore the idea of enabling a robot to modify its own learning method based on its previous experience. Humans do the same: when studying, for instance when memorizing something, they explore different strategies, such as reading multiple times, rewriting, reciting aloud, visualizing, and repeating the learning sessions, or learning only once, the night before the test. Each individual eventually chooses a preferred strategy and tweaks its specifics as it is reused, often based on its perceived effectiveness. Humans learn how to learn.

In our work, we focused on how a robot could improve the way it explores a new, unknown task. The exploration strategy is an important factor in learning effectiveness, as our team’s work on intrinsic motivation has demonstrated. Moreover, autonomous robots, while potentially subjected to very diverse situations, retain a constant morphology: their kinematics and dynamics remain stable, and their motor space, the set of possible motor commands, stays the same. Our hypothesis was that some exploration strategies are a priori more effective for a given robot, and that those strategies can be uncovered by analyzing past learning experience, that is, past exploration trajectories. Using those exploration strategies should then increase learning performance compared to random ones.

Our experiment confirmed this. Through autonomous empirical measurement, we identified the motor commands of a first task that belonged to the areas where learning had been the most effective, and then reused them on a different but similar task, where the robot did not have access to the learning experience of the first task. Early learning performance increased significantly. Our method only requires that the motor space stays the same: the sensory space can be arbitrarily different (making it possible to reuse an exploration strategy to learn in another modality), and the method makes no assumptions about the learning algorithms used, which can even differ between tasks.
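
The reuse idea can be illustrated with a small sketch. It is our own simplification, not the algorithm from the paper: we assume each past motor command is scored by the novelty of its sensory effect (distance to the closest effect observed before it) as a stand-in for “areas where learning had been the most effective”, keep the best-scoring commands, and seed exploration of the new task with perturbed copies of them. Only motor commands are carried over, so the second task’s sensory space plays no role. The scoring rule, `keep_fraction`, `p_reuse`, `noise` and the toy first task are all hypothetical.

```python
import math
import random

def novelty_scores(trajectory):
    """Score each (command, effect) pair of a past exploration trajectory by the
    distance from its effect to the closest effect observed before it
    (a hypothetical proxy for how much that command taught the robot)."""
    scores, seen = [], []
    for command, effect in trajectory:
        score = min((math.dist(effect, e) for e in seen), default=float("inf"))
        scores.append((score, command))
        seen.append(effect)
    return scores

def select_reusable_commands(trajectory, keep_fraction=0.2):
    """Keep the motor commands whose effects were the most novel."""
    ranked = sorted(novelty_scores(trajectory), key=lambda sc: sc[0], reverse=True)
    n_keep = max(1, int(len(ranked) * keep_fraction))
    return [command for _, command in ranked[:n_keep]]

def make_explorer(reused, bounds, p_reuse=0.5, noise=0.05, rng=None):
    """Return a function proposing motor commands for a new task: with
    probability p_reuse, perturb a reused command; otherwise sample uniformly.
    Only the motor space (bounds) is shared between tasks."""
    rng = rng if rng is not None else random.Random(0)
    def propose():
        if reused and rng.random() < p_reuse:
            base = rng.choice(reused)
            return tuple(min(hi, max(lo, x + rng.gauss(0.0, noise * (hi - lo))))
                         for x, (lo, hi) in zip(base, bounds))
        return tuple(rng.uniform(lo, hi) for lo, hi in bounds)
    return propose

# Hypothetical first task: only commands with c[0] > 0.8 move the object.
data_rng = random.Random(1)
first_task = []
for _ in range(200):
    c = (data_rng.random(), data_rng.random())
    first_task.append((c, (max(0.0, c[0] - 0.8) * 5,)))

reused = select_reusable_commands(first_task, keep_fraction=0.1)
propose = make_explorer(reused, bounds=[(0.0, 1.0), (0.0, 1.0)])
print(sum(c[0] > 0.8 for c in reused), "of", len(reused), "reused commands are effective")
```

Mixing reused and uniform proposals (`p_reuse`) is a design choice of this sketch: it biases early exploration toward commands that were informative before while still covering the whole motor space.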

The article is available here.

We have released the code used to run the experiments so that anyone can reproduce and analyze them. You can access it here.

Reference: Fabien Benureau, Pierre-Yves Oudeyer, “Autonomous Reuse of Motor Exploration Trajectories”, in the proceedings of ICDL-Epirob 2013, Osaka, Japan.

ICDL-Epirob 2013: Robot Learning Simultaneously a Task and How to Interpret Human Instructions

Can a robot learn a new task if the task is unknown and the user is providing unknown instructions?

We explored this question in our paper: Robot Learning Simultaneously a Task and How to Interpret Human Instructions. To appear in the Joint IEEE International Conference on Development and Learning and on Epigenetic Robotics (ICDL-EpiRob), Osaka, Japan (2013).

In this paper we present an algorithm to bootstrap shared understanding in a human-robot interaction scenario where the user teaches a robot a new task using teaching instructions yet unknown to it. In such cases, the robot needs to estimate simultaneously what the task is and the meaning of the instructions received from the user. For this work, we consider a scenario where a human teacher uses initially unknown spoken words, whose unknown meaning is either feedback (good/bad) or guidance (go left, right, …). We present computational results, within an inverse reinforcement learning framework, showing that a) it is possible to learn the meaning of unknown and noisy teaching instructions as well as a new task at the same time, b) the acquired knowledge about instructions can be reused to learn new tasks, and c) even if the robot initially knows the meaning of some instructions, using extra unknown teaching instructions improves learning efficiency.
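
The core idea of estimating the task and the meaning of instructions at the same time can be sketched as hypothesis testing. The sketch below is a deliberate simplification, not the inverse reinforcement learning method of the paper: it assumes a 10-state corridor, discrete words instead of speech, feedback instructions only, and a simulated noisy teacher, and it scores each candidate goal by how consistently the observed words can be mapped to the meanings (“good”/“bad”) that goal would imply. All names and parameters are hypothetical.

```python
import random
from collections import Counter

N_STATES = 10
ACTIONS = (-1, +1)  # move left / move right

def step(state, action):
    """Deterministic move, clamped to the corridor."""
    return min(N_STATES - 1, max(0, state + action))

def meaning(goal, state, action):
    """Meaning a feedback word would have if `goal` were the task:
    'good' when the action brings the robot closer to the goal."""
    closer = abs(step(state, action) - goal) < abs(state - goal)
    return "good" if closer else "bad"

def simulate_teacher(true_goal, words, n, noise, rng):
    """Hypothetical teacher: says words['good'] or words['bad'] after each
    random action, flipping the intended meaning with probability `noise`."""
    log = []
    for _ in range(n):
        s, a = rng.randrange(N_STATES), rng.choice(ACTIONS)
        m = meaning(true_goal, s, a)
        if rng.random() < noise:
            m = "bad" if m == "good" else "good"
        log.append((s, a, words[m]))
    return log

def word_label_counts(goal, log):
    """For each word, count the meanings it would have under this goal."""
    per_word = {}
    for s, a, w in log:
        per_word.setdefault(w, Counter())[meaning(goal, s, a)] += 1
    return per_word

def consistency(goal, log):
    """Fraction of observations explained by the best word->meaning mapping
    under this task hypothesis (majority meaning per word)."""
    counts = word_label_counts(goal, log)
    return sum(c.most_common(1)[0][1] for c in counts.values()) / len(log)

def infer(log):
    """Jointly pick the goal and the word meanings that fit best."""
    best = max(range(N_STATES), key=lambda g: consistency(g, log))
    counts = word_label_counts(best, log)
    return best, {w: c.most_common(1)[0][0] for w, c in counts.items()}

rng = random.Random(0)
log = simulate_teacher(true_goal=6, words={"good": "ba", "bad": "bo"},
                       n=300, noise=0.1, rng=rng)
goal, word_meanings = infer(log)
print(goal, word_meanings)
```

Neither the goal nor the word meanings are known in advance: under the wrong goal hypothesis, the words cannot be mapped consistently to meanings, which is what singles out the right one.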

Learn more from my webpage: https://flowers.inria.fr/jgrizou/

Flowers at Maths Land Competition

The Flowers team was invited by CapSciences to the prize ceremony of the Maths Land competition for students, held on May 29th in the science museum’s buildings during the Mathematics Week organized by the APMEP (l’Association des Professeurs de Mathématiques de l’Enseignement Public, the association of public-education mathematics teachers).

As the team is taking part in the development of the major exhibition CervoRama, this was an opportunity to introduce the students to the team’s research, to explain what a job in academia entails, and to discuss the various experiments presented.

During the workshop, the goal was to help the students discover what innovation is, to stimulate their curiosity by having them meet researchers, and to spark their imagination by asking them to find applications for the technologies they had just learned about. The students acted as “young reporters” whose mission was to produce a report on innovation. To do so, they could interview three researchers and engineers working on digital innovation. At the end of the interviews, they presented their vision of innovation in a video.

King-Sun Fu Best Paper Award

At ICRA 2013, Freek Stulp received the “King-Sun Fu Best Paper Award of the IEEE Transactions on Robotics for the year 2012” for the paper “Reinforcement Learning with Sequences of Motion Primitives for Robust Manipulation” by Freek Stulp, Evangelos Theodorou, and Stefan Schaal. IEEE T-RO is one of the highest-impact journals in robotics, and we are especially honored because this is the first time this award has been given to a paper on machine learning.

Active learning of motor skills with intrinsically motivated goal babbling in robots

We have recently published an extensive article describing the SAGG-RIAC architecture, which enables efficient active learning of motor skills in high-dimensional spaces through intrinsically motivated goal babbling in robots.
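
As a rough illustration of goal babbling driven by competence progress, here is a simplified sketch in the spirit of the architecture, not the SAGG-RIAC implementation from the article. It assumes fixed goal-space regions (SAGG-RIAC splits regions adaptively), a nearest-neighbour inverse model, and a hypothetical one-dimensional robot `forward` whose reachable outcomes cover only half of the goal space. A region’s interest is the absolute difference between recent and older mean competence over a sliding window, and regions are chosen mostly in proportion to that interest.

```python
import random

class Region:
    """Fixed interval of goal space with a history of competences
    (simplification: SAGG-RIAC splits regions adaptively instead)."""
    def __init__(self, lo, hi, window=20):
        self.lo, self.hi, self.window = lo, hi, window
        self.competences = []

    def interest(self):
        """Absolute competence progress over the sliding window:
        |mean(recent half) - mean(older half)|."""
        c = self.competences[-self.window:]
        if len(c) < 4:
            return 1.0  # optimistic: try barely explored regions first
        half = len(c) // 2
        older, recent = c[:half], c[half:]
        return abs(sum(recent) / len(recent) - sum(older) / len(older))

def forward(m):
    """Hypothetical robot: outcome of motor command m in [0, 1].
    Goals are drawn from [0, 2], so half of the goal space is unreachable."""
    return m * m

def goal_babbling(n_iter=1000, n_regions=4, eps=0.2, noise=0.05, seed=0):
    rng = random.Random(seed)
    regions = [Region(2.0 * i / n_regions, 2.0 * (i + 1) / n_regions)
               for i in range(n_regions)]
    memory = []  # (motor command, observed outcome) pairs
    for _ in range(10):  # short random motor babbling to bootstrap
        m = rng.random()
        memory.append((m, forward(m)))
    for _ in range(n_iter):
        # 1. Choose a region: mostly in proportion to interest, sometimes at random.
        weights = [r.interest() for r in regions]
        if rng.random() < eps or sum(weights) == 0:
            region = rng.choice(regions)
        else:
            region = rng.choices(regions, weights=weights)[0]
        # 2. Sample a goal there; the nearest-neighbour inverse model proposes a command.
        goal = rng.uniform(region.lo, region.hi)
        m_best, _ = min(memory, key=lambda p: abs(p[1] - goal))
        m = min(1.0, max(0.0, m_best + rng.gauss(0.0, noise)))
        # 3. Execute, measure competence (negative distance to goal), and store.
        y = forward(m)
        region.competences.append(-abs(y - goal))
        memory.append((m, y))
    return memory, regions

def reach_error(memory, goal):
    """Distance between a goal and the closest outcome the learner can reproduce."""
    return min(abs(y - goal) for _, y in memory)

memory, regions = goal_babbling()
test_goals = [i / 20 for i in range(1, 20)]
print(sum(reach_error(memory, g) for g in test_goals) / len(test_goals))
```

Because interest measures progress rather than competence itself, regions where competence has plateaued, such as the unreachable half of the goal space in this toy setup, tend to attract fewer goals than regions where the robot is still improving.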

Baranes, A., Oudeyer, P-Y. (2013) Active Learning of Inverse Models with Intrinsically Motivated Goal Exploration in Robots, Robotics and Autonomous Systems, 61(1), pp. 49-73. http://dx.doi.org/10.1016/j.robot.2012.05.008.

Learning to recognize parts of choreographies with NMF

Original post: http://omangin.tumblr.com/

How to learn simple gestures from the observation of choreographies that are combinations of these gestures?

We explored this question in our paper:

We believe that this question is of scientific interest for two main reasons.

Choreographies are combinations of parallel primitive gestures

Natural human motions are highly combinatorial in many ways. Sequences of gestures such as "walk to the fridge; open the door; grab a bottle; open it; …" are a good example of how simple gestures, also called primitive motions, combine into a complex activity. Being able to discover the parts of a complex activity by observation, learn them, reproduce them, and compose them into new activities is thus an important capability for robots and intelligent systems, and a step towards imitation learning and human behavior understanding.